Are you overwhelmed by the vast number of machine learning topics, unsure where to start or what order to follow?

Then this machine learning course syllabus is for you. It gives you a structured outline of the subjects and topics to learn.

Also, I’ve listed practical machine-learning projects that will improve your learning.

Note: Our machine learning and AI trainers created this outline after teaching more than 30,000 students across India, both online and in offline classes in Vijayawada, Hyderabad, and Bangalore.

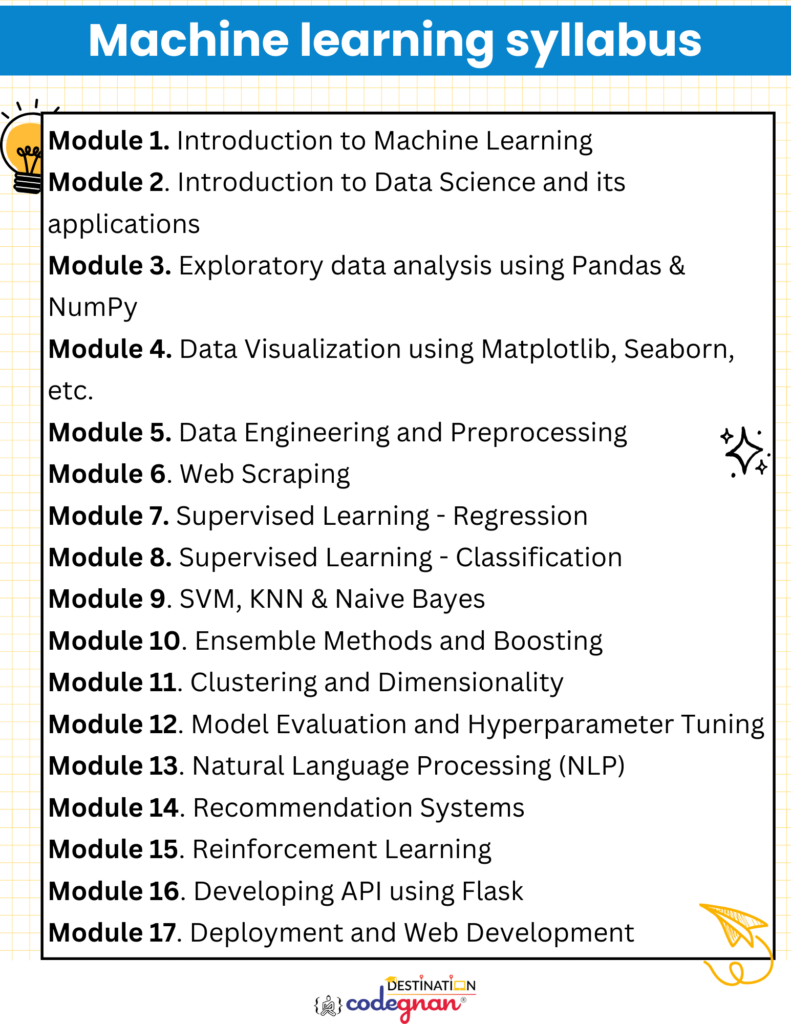

Here’s the course syllabus at a glance:

| Module | Topic |
|---|---|
| 1 | Introduction to Machine Learning |
| 2 | Introduction to Data Science and its Applications |
| 3 | Exploratory Data Analysis (EDA) using Pandas and NumPy |
| 4 | Data Visualization using Matplotlib, Seaborn, and Plotly |
| 5 | Data Engineering and Preprocessing |
| 6 | Web Scraping |
| 7 | Supervised Learning – Regression |
| 8 | Supervised Learning – Classification |
| 9 | SVM, KNN & Naive Bayes |
| 10 | Ensemble Methods and Boosting |
| 11 | Unsupervised Learning – Clustering |
| 12 | Unsupervised Learning – Dimensionality Reduction |
| 13 | Model Evaluation and Hyperparameter Tuning |
| 14 | Natural Language Processing (NLP) |
| 15 | Recommendation Systems |
| 16 | Reinforcement Learning |
| 17 | Developing API using Flask / Webapp with Streamlit |
| 18 | Deployment and Web Development |


👉 Check out the complete syllabus for our machine learning course, a 1-month classroom training course available in Hyderabad and Vijayawada, by downloading the Machine Learning course syllabus PDF.

Complete Machine Learning Syllabus and Curriculum for Beginners

Module 1. Introduction to Machine Learning

Before you dive into the more complex concepts of machine learning, this part of the course introduces machine learning and its types, the machine learning workflow, and common data preprocessing techniques. You will also set up your development environment with Python, Jupyter Notebook, and key libraries such as NumPy, Pandas, and Scikit-learn.

- Introduction to Machine Learning and its types (supervised, unsupervised, reinforcement learning)

- Setting up the development environment (Python, Jupyter Notebook, libraries: NumPy, Pandas, Scikit-learn)

- Overview of the Machine Learning workflow and common data preprocessing techniques

👉 Find the complete Python course syllabus

Module 2. Introduction to Data Science and its Applications

Next, you will gain a basic knowledge of data science, which is closely connected to machine learning. Data science collects quality data, cleans it, and prepares it to be fed to machine learning models. This section of the curriculum introduces data science and its role in different industries, the data science lifecycle and its key stages, the different types of data, and the importance of data collection and preprocessing.

- Definition of data science and its role in various industries.

- Explanation of the data science lifecycle and its key stages.

- Overview of the different types of data: structured, unstructured, and semi-structured.

- Discussion of the importance of data collection, data quality, and data preprocessing.

Module 3. Exploratory Data Analysis (EDA) using Pandas and NumPy

EDA is the first step of any data analysis project: you examine the data thoroughly to identify its main characteristics, patterns, and trends. We will use two popular Python libraries, Pandas and NumPy, to perform EDA. This module covers summarizing, filtering, and transforming data with these libraries, data cleaning techniques, handling missing values, dealing with outliers, and statistical analysis of data.

- Introduction to Pandas

- Overview of NumPy

- Explanation of key data structures in Pandas: Series and DataFrame.

- Hands-on exploration of data using Pandas to summarize, filter, and transform data.

- Data cleaning techniques, handling missing values, and dealing with outliers.

- Statistical analysis of data using NumPy functions.
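In the course these cleaning steps are done with Pandas (for example, `fillna` and `describe`), but the underlying logic is easy to see in plain Python. The sketch below is a minimal, standard-library-only illustration, assuming a single numeric column in which `None` marks a missing value: it fills the gaps with the median and flags outliers with the common 1.5 × IQR rule. The `ages` values are made up.

```python
import statistics

def impute_median(values):
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def iqr_outliers(values):
    """Return the values lying outside [Q1 - 1.5*IQR, Q3 + 1.5*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical column: two missing entries and one extreme value
ages = [23, 25, None, 31, 29, 27, None, 95, 26, 28]
clean = impute_median(ages)
print(clean)                # missing entries replaced by the median, 27.5
print(iqr_outliers(clean))  # [95]
```

The median is used for imputation rather than the mean because a single extreme value, like 95 here, would pull the mean upward.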

Module 4. Data Visualization using Matplotlib, Seaborn, and Plotly

Data visualization is the process of representing data using visual components like graphs, charts, and infographics. This section introduces data visualization techniques that help you translate complex data into easy-to-understand formats. You will learn Matplotlib, Seaborn, and Plotly, three popular Python libraries for data visualization, and gain a good understanding of different types of plots, customizing plots with titles, labels, colors, and styles, advanced plotting techniques, and creating interactive and dynamic visualizations.

- Introduction to Data Visualization and its importance in data analysis.

- Overview of Matplotlib

- Exploring different types of plots: line plots, scatter plots, bar plots, histograms, etc.

- Customizing plots with labels, titles, colors, and styles.

- Introduction to Seaborn

- Advanced plotting techniques with Seaborn: heatmaps, pair plots, and categorical plots.

- Introduction to Plotly

- Creating interactive and dynamic visualizations with Plotly.

Module 5. Data Engineering and Preprocessing

Data engineering and preprocessing are interconnected steps that prepare raw data for machine learning. Data engineering focuses on collecting, storing, and preparing data for analysis, while data preprocessing is the last stage of data preparation before the data is fed to machine learning models. In this section, you will learn both, covering data cleaning, transformation, and integration, handling missing values, feature engineering techniques, data scaling and normalization, and dealing with categorical variables.

- Introduction to Data Engineering: Data cleaning, transformation, and integration

- Data cleaning and handling missing values: Imputation, deletion, and outlier treatment

- Feature Engineering techniques: Creating new features, handling date and time variables, and encoding categorical variables

- Data Scaling and Normalization: Standardization, min-max scaling, etc.

- Dealing with categorical variables: One-hot encoding, label encoding, etc.
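To make the scaling and encoding bullets concrete, here is a plain-Python sketch of min-max scaling, standardization (z-scores), and one-hot encoding. It is illustrative only; scikit-learn provides `MinMaxScaler`, `StandardScaler`, and `OneHotEncoder` for real pipelines. The salary and city values are hypothetical.

```python
import statistics

def min_max_scale(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Shift to mean 0 and scale to (population) standard deviation 1."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    return [(v - mu) / sigma for v in values]

def one_hot(categories):
    """Encode each category as a 0/1 vector with one column per distinct value."""
    levels = sorted(set(categories))
    return levels, [[1 if c == level else 0 for level in levels] for c in categories]

salaries = [30_000, 45_000, 60_000, 90_000]  # hypothetical numeric feature
print(min_max_scale(salaries))  # [0.0, 0.25, 0.5, 1.0]
print(standardize(salaries))
levels, encoded = one_hot(["Hyderabad", "Vijayawada", "Hyderabad", "Bangalore"])
print(levels)   # ['Bangalore', 'Hyderabad', 'Vijayawada']
print(encoded)  # [[0, 1, 0], [0, 0, 1], [0, 1, 0], [1, 0, 0]]
```

In practice, compute the scaling parameters (min/max or mean/standard deviation) on the training data only and reuse them on the test data, so no information leaks from the test set.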

Module 6. Web Scraping

Web scraping is the process of extracting data from websites using different tools and libraries. Typically, a library like requests downloads the page at a given URL, a parser like BeautifulSoup extracts all or only the required data from its HTML, and the results are stored in appropriate formats like CSV and JSON. In this part of the course, you will learn about different web scraping tools and libraries and the ethical considerations involved. It also covers scraping data from websites, handling different types of data on web pages, and storing the scraped data in appropriate formats.

- Introduction to web scraping: Tools, libraries, and ethical considerations

- Scraping data from websites using libraries like BeautifulSoup and requests: HTML parsing, locating elements, and extracting data

- Handling different types of data on websites: Tables, forms, etc.

- Storing scraped data in appropriate formats: CSV, JSON, or databases
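The course uses requests and BeautifulSoup; the sketch below swaps in the standard library's `html.parser` so it runs without extra packages. It parses a hard-coded HTML table (a stand-in for a downloaded page, so no network call is made) and stores the rows as CSV and JSON. The HTML snippet and the `TableParser` class are hypothetical illustrations, not part of any scraping library.

```python
import csv
import io
import json
from html.parser import HTMLParser

class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows, self._row, self._in_cell = [], None, False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._in_cell:  # ignore whitespace between tags
            self._row[-1] += data.strip()

# Hypothetical page content; in practice it would come from requests.get(url).text
html = """
<table>
  <tr><th>Module</th><th>Topic</th></tr>
  <tr><td>1</td><td>Introduction to Machine Learning</td></tr>
  <tr><td>6</td><td>Web Scraping</td></tr>
</table>
"""

parser = TableParser()
parser.feed(html)
header, *records = parser.rows
data = [dict(zip(header, r)) for r in records]

# CSV kept in memory here; use open("modules.csv", "w", newline="") for a real file
buf = io.StringIO()
csv.writer(buf).writerows(parser.rows)
print(buf.getvalue())
print(json.dumps(data, indent=2))
```

Before scraping a real site, check its robots.txt and terms of use, which is part of the ethical considerations this module covers.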

Module 7. Supervised Learning – Regression

Regression in supervised learning models the relationship between a dependent variable and one or more independent variables. It is used to predict continuous outcomes, such as prices or temperatures, in predictive modelling. In this section of the course, you will learn about regression and its types in detail.

- Introduction to Regression: Definition, types, and use cases

- Linear Regression: Theory, cost function, gradient descent, and assumptions

- Polynomial Regression: Adding polynomial terms, degree selection, and overfitting

- Lasso and Ridge Regression: Regularization techniques for controlling model complexity

- Evaluation metrics for regression models: Mean Squared Error (MSE), R-squared, and Mean Absolute Error (MAE)
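The linear regression topics above (cost function, gradient descent, evaluation metrics) fit together in a few lines. This is a minimal plain-Python sketch, assuming a single input feature and made-up data; the learning rate and epoch count are illustrative choices. scikit-learn's `LinearRegression` fits the same model directly by least squares, without gradient descent.

```python
def fit_linear_regression(xs, ys, lr=0.01, epochs=5000):
    """Fit y ≈ w*x + b by batch gradient descent on the MSE cost."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of (1/n) * sum((w*x + b - y)^2) with respect to w and b
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def regression_metrics(y_true, y_pred):
    """MSE, MAE and R-squared for a set of predictions."""
    n = len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    mean_y = sum(y_true) / n
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return {
        "MSE": ss_res / n,
        "MAE": sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n,
        "R2": 1 - ss_res / ss_tot,
    }

# Made-up toy data that roughly follows y = 2x + 1
xs = [0, 1, 2, 3, 4, 5]
ys = [1.1, 2.9, 5.2, 6.8, 9.1, 11.0]
w, b = fit_linear_regression(xs, ys)
preds = [w * x + b for x in xs]
print(f"w={w:.3f}, b={b:.3f}")
print(regression_metrics(ys, preds))
```

If the learning rate is too large, the updates overshoot and diverge; too small, and many more epochs are needed. This trade-off is why learning rate is treated as a tunable hyperparameter.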

Module 8. Supervised Learning – Classification

Another application of supervised learning is classification, where the model predicts the correct label (class) for a given input. This section introduces classification, its types, and use cases, along with logistic regression, decision trees, and random forests. You will also learn about evaluation metrics for classification models and how to implement classification models with the scikit-learn library.

- Introduction to Classification: Definition, types, and use cases

- Logistic Regression: Theory, logistic function, binary and multiclass classification

- Decision Trees: Construction, splitting criteria, pruning, and visualization

- Random Forests: Ensemble learning, bagging, and feature importance

- Evaluation metrics for classification models: Accuracy, Precision, Recall, F1-score, and ROC curves

- Implementation of classification models using scikit-learn library
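The evaluation metrics listed above all come from counting true positives, false positives, and false negatives. The sketch below computes them by hand for a binary classifier, assuming hypothetical labels where 1 is the positive class; in scikit-learn the equivalents are `accuracy_score`, `precision_score`, `recall_score`, and `f1_score` in `sklearn.metrics`.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels: 1 = spam, 0 = not spam
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
print(classification_metrics(y_true, y_pred))
```

Here accuracy (0.625) and precision (0.6) differ from recall (0.75), which is why a single accuracy number is rarely enough, especially on imbalanced data.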

Module 9. SVM, KNN & Naive Bayes

SVM, KNN, and Naive Bayes are three popular supervised learning algorithms. This segment covers all three in detail.

- Support Vector Machines (SVM): Study SVM theory, different kernel functions (linear, polynomial, radial basis function), and the margin concept. Implement SVM classification and regression and evaluate the models.

- K-Nearest Neighbors (KNN): Understand the KNN algorithm, distance metrics, and the concept of K in KNN. Implement KNN classification and regression and evaluate the models.

- Naive Bayes: Learn about the Naive Bayes algorithm, conditional probability, and Bayes’ theorem. Implement Naive Bayes classification and evaluate the model’s performance.
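Of the three algorithms, KNN is the easiest to write from scratch. The sketch below is a minimal KNN classifier using Euclidean distance and a majority vote, assuming small hypothetical 2-D training points; scikit-learn's `KNeighborsClassifier` implements the same idea with faster neighbor search.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every training point, nearest first
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical 2-D points belonging to two classes
train_X = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6)]
train_y = ["A", "A", "A", "B", "B", "B"]
print(knn_predict(train_X, train_y, (2, 2)))  # A
print(knn_predict(train_X, train_y, (6, 5)))  # B
```

An odd K avoids tied votes in two-class problems; a small K follows the training data closely, while a larger K gives smoother decision boundaries.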

Module 10. Ensemble Methods and Boosting

Ensemble methods in machine learning combine multiple individual models to create a stronger and more accurate predictive model. This module gives you a basic understanding of ensemble methods, evaluating and fine-tuning ensemble models, and handling imbalanced datasets. You will also learn what boosting is and two popular boosting algorithms, AdaBoost and XGBoost.

- AdaBoost: Boosting technique, weak learners, and iterative weight adjustment

- Gradient Boosting (XGBoost): Gradient boosting algorithm, regularization, and hyperparameter tuning

- Evaluation and fine-tuning of ensemble models: Cross-validation, grid search, and model selection

- Handling imbalanced datasets: Techniques for dealing with class imbalance, such as oversampling and undersampling
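The "iterative weight adjustment" in AdaBoost is a short formula. This sketch shows one round of classic discrete AdaBoost for labels in {-1, +1}: the weak learner's weighted error ε gives it a vote α = ½ ln((1 − ε)/ε), and each sample weight is multiplied by exp(−α·y·h(x)) and renormalized, so misclassified samples count more in the next round. The weak learner's predictions are hard-coded (hypothetical) so that only the update rule is shown; scikit-learn's `AdaBoostClassifier` runs the full loop.

```python
import math

def adaboost_round(weights, y_true, y_pred):
    """One discrete-AdaBoost update; assumes the weighted error is strictly between 0 and 1."""
    # Weighted error of the weak learner under the current sample weights
    eps = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p) / sum(weights)
    alpha = 0.5 * math.log((1 - eps) / eps)  # this learner's vote in the final ensemble
    # t * p is -1 for a mistake (weight grows) and +1 for a hit (weight shrinks)
    new = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(new)
    return alpha, [w / total for w in new]

y_true = [1, 1, -1, -1, 1]
y_pred = [1, -1, -1, -1, 1]                # hypothetical weak learner: one mistake
weights = [1 / len(y_true)] * len(y_true)  # start with uniform weights
alpha, weights = adaboost_round(weights, y_true, y_pred)
print(round(alpha, 3), [round(w, 3) for w in weights])
```

With ε = 0.2, α works out to ln 2, and after renormalization the single misclassified sample carries half of the total weight, forcing the next weak learner to focus on it.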

Module 11. Unsupervised Learning – Clustering

Clustering is the process of grouping data points with similar features into the same group, or cluster. Unlike supervised learning, there are no predefined labels; the algorithms discover hidden patterns within the data on their own. In this section, you will learn more about clustering and its types, the K-means and DBSCAN (density-based spatial clustering of applications with noise) algorithms, and how to evaluate clustering results.

- Introduction to Clustering: Definition, types, and use cases

- K-means Clustering: Algorithm steps, initialization methods, and elbow method for determining the number of clusters

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): Core points, density reachability, and epsilon-neighborhoods

- Evaluation of clustering algorithms: Silhouette score, cohesion, and separation metrics
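The K-means steps above (initialize, assign, update, repeat) and the elbow method can be sketched in plain Python. This minimal version uses random initialization from the data points and made-up 2-D data; scikit-learn's `KMeans` defaults to the smarter k-means++ initialization and runs several restarts.

```python
import math
import random

def kmeans(points, k, iters=100, seed=0):
    """Lloyd's k-means: alternate assignment and update steps until stable."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # random initialization: k data points
    for _ in range(iters):
        # Assignment step: each point joins the cluster of its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its points (keep it if empty)
        new_centroids = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: centroids stopped moving
            break
        centroids = new_centroids
    return centroids, clusters

def inertia(centroids, clusters):
    """Within-cluster sum of squared distances, the quantity the elbow method plots."""
    return sum(math.dist(p, c) ** 2 for c, cl in zip(centroids, clusters) for p in cl)

# Hypothetical 2-D data with two well-separated groups
points = [(1, 1), (1.5, 2), (2, 1.5), (8, 8), (8.5, 9), (9, 8.5)]
for k in (1, 2, 3):
    centroids, clusters = kmeans(points, k)
    print(k, round(inertia(centroids, clusters), 2))
```

Inertia always decreases as K grows, so the elbow method looks for the K after which the drop flattens out; for this data that is K = 2.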

Module 12. Unsupervised Learning – Dimensionality Reduction

Another essential concept of unsupervised learning is dimensionality reduction. This technique reduces the number of features in your data while preserving as much of the essential information as possible, which makes ML models simpler and more manageable. You will learn how dimensionality reduction works, the curse of dimensionality, and feature extraction and selection. Additionally, this section gives a basic understanding of PCA (Principal Component Analysis) and its implementation with the scikit-learn library.

- Introduction to Dimensionality Reduction: Curse of dimensionality, feature extraction, and feature selection

- Principal Component Analysis (PCA): Eigenvectors, eigenvalues, variance explained, and dimensionality reduction

- Implementation of PCA using scikit-learn library
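For two features, the eigenvectors and eigenvalues behind PCA have a closed form, which makes the idea visible without a linear algebra library. The sketch below is an illustration under that 2-D assumption only (scikit-learn's `PCA` handles any number of dimensions via SVD and reports `explained_variance_ratio_`). It finds the first principal component of made-up data, the share of variance it explains, and projects each point onto it.

```python
import math
import statistics

def pca_2d(xs, ys):
    """PCA for 2-D data via the closed-form eigen-decomposition of the 2x2 covariance matrix."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    n = len(xs)
    a = statistics.variance(xs)                                     # var(x)
    c = statistics.variance(ys)                                     # var(y)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)  # cov(x, y)
    # Eigenvalues of [[a, b], [b, c]]: the variance along each principal axis
    mid, rad = (a + c) / 2, math.hypot((a - c) / 2, b)
    lam1, lam2 = mid + rad, mid - rad
    # Unit eigenvector for lam1, i.e. the first principal component
    vx, vy = (b, lam1 - a) if b != 0 else ((1.0, 0.0) if a >= c else (0.0, 1.0))
    norm = math.hypot(vx, vy)
    pc1 = (vx / norm, vy / norm)
    # Project the centred data onto PC1: each 2-D point becomes a 1-D score
    scores = [(x - mx) * pc1[0] + (y - my) * pc1[1] for x, y in zip(xs, ys)]
    return pc1, lam1 / (lam1 + lam2), scores

xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.0]  # hypothetical data lying close to y = 2x
pc1, explained, scores = pca_2d(xs, ys)
print(pc1, round(explained, 4))
```

Because nearly all the variance lies along one direction, keeping only the 1-D `scores` discards very little information, which is exactly the trade-off dimensionality reduction makes.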

Module 13. Model Evaluation and Hyperparameter Tuning

Model evaluation and hyperparameter tuning help ensure that machine learning models perform well on data they have not seen before. Here, you will learn cross-validation and other model evaluation techniques, hyperparameter tuning with GridSearchCV and RandomizedSearchCV, and how to select and compare models.

- Cross-validation and model evaluation techniques

- Hyperparameter tuning using GridSearchCV and RandomizedSearchCV

- Model selection and comparison
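What `GridSearchCV` automates is a loop over candidate hyperparameter values, each scored by k-fold cross-validation. The sketch below spells that loop out in plain Python, using a hypothetical 1-D k-NN regressor as the model, `n_neighbors` as the hyperparameter, and synthetic noisy data; all names here are illustrative, not scikit-learn's API.

```python
import math
import random

def k_fold_indices(n, n_folds, seed=0):
    """Shuffle indices 0..n-1 once, then yield (train, test) index lists per fold."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    for f in range(n_folds):
        test = idx[f::n_folds]  # every n_folds-th shuffled index goes to fold f
        held_out = set(test)
        yield [i for i in idx if i not in held_out], test

def knn_regress(train_x, train_y, x, n_neighbors):
    """Hypothetical 1-D k-NN regressor: mean target of the n_neighbors closest inputs."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:n_neighbors]
    return sum(train_y[i] for i in nearest) / len(nearest)

def cross_val_mse(xs, ys, n_neighbors, n_folds=5):
    """Average test-fold MSE over all folds for one hyperparameter value."""
    fold_scores = []
    for train, test in k_fold_indices(len(xs), n_folds):
        tx, ty = [xs[i] for i in train], [ys[i] for i in train]
        errors = [(knn_regress(tx, ty, xs[i], n_neighbors) - ys[i]) ** 2 for i in test]
        fold_scores.append(sum(errors) / len(errors))
    return sum(fold_scores) / len(fold_scores)

# Synthetic data: y = sin(x) plus Gaussian noise
rng = random.Random(42)
xs = [i / 4 for i in range(40)]
ys = [math.sin(x) + rng.gauss(0, 0.2) for x in xs]

# Grid search: score every candidate with 5-fold CV and keep the lowest error
grid = [1, 3, 5, 9]
results = {k: cross_val_mse(xs, ys, k) for k in grid}
best = min(results, key=results.get)
print(results, "best n_neighbors:", best)
```

Every data point is used for testing exactly once, so the averaged score is a steadier estimate than a single train/test split. `RandomizedSearchCV` follows the same pattern but samples a fixed number of candidates instead of trying them all.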

Module 14. Natural Language Processing (NLP)

This section of the curriculum gives you a comprehensive introduction to NLP, the technology that enables computers to understand, process, and generate human language. You will learn about its applications and challenges, along with text preprocessing, text representation, and sentiment analysis techniques.

- Introduction to NLP: Understand the basics of NLP, its applications, and challenges.

- Text Preprocessing: Learn about tokenization, stemming, lemmatization, stop word removal, and other techniques for text preprocessing.

- Text Representation: Explore techniques such as Bag-of-Words (BoW), TF-IDF, and word embeddings (e.g., Word2Vec, GloVe) for representing text data.

- Sentiment Analysis: Study sentiment analysis techniques, build a sentiment analysis model using supervised learning, and evaluate its performance.
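Text preprocessing and TF-IDF can be sketched together in plain Python. This version lowercases, tokenizes with a regular expression, removes a tiny illustrative stop-word list, and weights each term by tf × log(N / df), one common TF-IDF variant. The documents are made up, and scikit-learn's `TfidfVectorizer` uses a smoothed IDF and L2 normalization by default, so its numbers will differ.

```python
import math
import re
from collections import Counter

STOP_WORDS = {"the", "a", "is", "and", "of", "to"}  # tiny illustrative list

def tokenize(text):
    """Lowercase, split into alphabetic tokens, and drop stop words."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOP_WORDS]

def tf_idf(docs):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * math.log(n / df[term])
            for term, count in counts.items()
        })
    return vectors

docs = [
    "The course is great and the trainers are great",
    "The course is too long",
    "Great projects and great placement support",
]
for vec in tf_idf(docs):
    print(sorted(vec.items(), key=lambda kv: -kv[1])[:3])
```

Words that appear in many documents ("course", "great") get a lower weight than words unique to one document ("too", "long"), so the vectors emphasize what distinguishes each text.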

Module 15. Recommendation Systems

Recommendation systems use data to predict what people are looking for and suggest relevant products. In this section, you will learn the two main types of recommendation systems, collaborative filtering and content-based filtering, as well as how recommendation systems are deployed and some advanced topics in NLP and recommendation systems.

- Introduction to Recommendation Systems: Understand the concept of recommendation systems, different types (collaborative filtering, content-based, hybrid), and evaluation metrics.

- Collaborative Filtering: Explore collaborative filtering techniques, including user-based and item-based approaches, and implement a collaborative filtering model.

- Content-Based Filtering: Study content-based filtering methods, such as TF-IDF and cosine similarity, and build a content-based recommendation system.

- Deployment and Future Directions: Discuss the deployment of recommendation systems and explore advanced topics in NLP and recommendation systems.
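Item-based collaborative filtering can be sketched with cosine similarity alone. In this minimal version, assuming a small hypothetical ratings table where 0 means "not rated", each unrated item is scored as a similarity-weighted average of the ratings the user gave to other items. The same `cosine` function also serves content-based filtering when applied to TF-IDF vectors of item descriptions.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

# Hypothetical user-by-item ratings on a 1-5 scale (0 = not rated yet)
items = ["Python", "SQL", "ML", "Deep Learning"]
ratings = {
    "asha":  [5, 4, 0, 0],
    "ravi":  [4, 5, 4, 5],
    "kiran": [1, 0, 5, 4],
    "meena": [5, 4, 4, 0],
}

def item_vector(j):
    """Column j of the rating matrix: every user's rating of item j."""
    return [row[j] for row in ratings.values()]

def recommend(user, top_n=2):
    """Item-based CF: predict each unrated item from similar items the user rated."""
    own = ratings[user]
    rated = [k for k, r in enumerate(own) if r > 0]
    scores = {}
    for j, r in enumerate(own):
        if r > 0:
            continue  # only score items the user has not rated
        sims = [(cosine(item_vector(j), item_vector(k)), own[k]) for k in rated]
        total = sum(s for s, _ in sims)
        scores[items[j]] = sum(s * rt for s, rt in sims) / total if total else 0.0
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top_n]

print(recommend("asha"))
```

Treating 0 as "missing" inside the cosine is a simplification; real systems often mean-center ratings or use sparse matrices. User-based filtering works the same way, comparing rows (users) instead of columns (items).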

Module 16. Reinforcement Learning

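This part of the course introduces reinforcement learning and how it works. In reinforcement learning, an agent (the decision maker) learns by interacting with an environment: it takes actions, receives rewards or penalties, and gradually learns which actions lead to the highest long-term reward, without being given labeled correct answers. You will also learn about Markov Decision Processes (MDPs) and the Q-learning algorithm.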

- Introduction to Reinforcement Learning: Agent, environment, state, action, and reward

- Markov Decision Processes (MDP): Markov property, transition probabilities, and value functions

- Q-Learning algorithm: Exploration vs. exploitation, Q-table, and learning rate

- Hands-on reinforcement learning projects and exercises
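The pieces listed above (agent, environment, reward, exploration vs. exploitation, Q-table, learning rate) all appear in a few lines of tabular Q-learning. The sketch below uses a made-up five-state corridor, and the environment, rewards, and hyperparameter values are all assumptions for illustration: the agent starts at state 0, earns a reward of 1 for reaching state 4, and picks actions with an epsilon-greedy rule.

```python
import random

N_STATES, GOAL = 5, 4  # hypothetical corridor: states 0..4, episode ends at state 4
ACTIONS = (-1, +1)     # action 0 moves left, action 1 moves right

def step(state, action):
    """Toy environment: move, stay inside the corridor, reward 1 only at the goal."""
    nxt = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    return nxt, (1.0 if nxt == GOAL else 0.0), nxt == GOAL

def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q-table: q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Exploration vs. exploitation: random action with probability epsilon
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max(range(2), key=lambda a: q[state][a])
            nxt, reward, done = step(state, action)
            # Update rule: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q

q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(GOAL)]
print(policy)
```

After training, the greedy policy should move right in every state: the discount factor gamma makes a reward reached in fewer steps worth more, so "right" earns the higher Q-value everywhere.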

Module 17. Developing API using Flask / Webapp with Streamlit

This section introduces two popular Python frameworks, Flask and Streamlit. You will learn to build an API with Flask, or a web app with Streamlit, to deploy a machine learning model, integrating data preprocessing with the model. You will also get hands-on training in designing a user-friendly web interface.

- Introduction to Flask / Streamlit web framework

- Creating a Flask / Streamlit application for ML model deployment

- Integrating data preprocessing and ML model

- Designing a user-friendly web interface

Module 18. Deployment and Web Development

Deployment and web development take a trained machine learning model and put it into action so users can access it in real time. This part of the course teaches you to build a web application for machine learning models and deploy it using AWS and PythonAnywhere.

- Building a web application for Machine Learning models: Creating forms, handling user input, and displaying results

- Deployment using AWS (Amazon Web Services): Setting up an AWS instance, configuring security groups, and deploying the application

- Deployment using PythonAnywhere: Uploading Flask application files, configuring WSGI, and launching the application

Machine Learning course curriculum at a glance

Machine Learning concepts to learn

1. Exploratory data analysis using Pandas and NumPy

Machine learning uses algorithms that learn patterns from data in order to make predictions, such as forecasting future trends. Exploratory Data Analysis (EDA) is the first phase of data analysis. To understand it clearly, we will first learn about Pandas and NumPy, two popular Python libraries for data manipulation and analysis. We will then learn how to summarize, filter, and transform data using Pandas, as well as perform statistical analysis of data using NumPy.

2. Data Visualization using Matplotlib, Seaborn, and Plotly

After data analysis, the next thing to learn for machine learning is data visualization: representing data in a visual format to understand the trends, patterns, and relationships within it. We will use three popular Python libraries for data visualization: Matplotlib, Seaborn, and Plotly. You will learn different types of plots, such as line plots, bar plots, and histograms, and how to customize them with labels, colors, titles, and styles. You will also learn some advanced plotting techniques with Seaborn and create interactive and dynamic visualizations with Plotly.

3. Data engineering and preprocessing

Having a clear knowledge of data engineering and preprocessing is essential for machine learning. Data engineering builds data pipelines to collect, store, and prepare data for machine learning models. Here, you will learn data cleaning, transformation, and integration, as well as handling missing values, feature engineering techniques, and data versioning. Data preprocessing cleans and prepares the data before it is fed into the model; you will learn data scaling and normalization techniques and how to deal with categorical variables.

4. Web scraping

Web scraping lets you gather data for machine learning from the web. It is the process of extracting data from multiple websites using special tools and libraries. Learning web scraping, and checking the data you collect, helps ensure you feed quality data to your machine learning models. Here, you will get a step-by-step introduction to web scraping using BeautifulSoup, collecting quality data, and storing it in appropriate formats like CSV and JSON.

5. Supervised and Unsupervised learning

Supervised and unsupervised learning are the two main approaches for training machines.

- Supervised learning

Supervised learning uses labeled datasets to train machines so that they can classify data and predict outcomes accurately. Supervised learning problems fall into two categories: classification and regression. Classification assigns input data to specific categories, while regression models the relationship between dependent and independent variables to predict continuous values. You will learn both in this course, along with Naive Bayes, Support Vector Machines (SVM), and a few more essential concepts.

- Unsupervised learning

Unsupervised learning uses algorithms to analyze and cluster unlabeled datasets to discover hidden patterns or data groupings without human intervention. Here, you need to learn about clustering and dimensionality reduction, including K-means clustering, Principal Component Analysis (PCA), and implementing PCA using scikit-learn.

6. Natural Language Processing

You also need to know NLP, a machine learning technology that enables computers to interpret, manipulate, and comprehend human language. It helps companies analyze the intent or sentiment in large volumes of messages and respond in real time. You will learn text preprocessing techniques like tokenization, stemming, and stop word removal, text representation techniques like Bag-of-Words and word embeddings, and sentiment analysis techniques.

7. Recommendation systems

You also need to learn about recommendation systems in machine learning. These systems are trained to identify your customers' preferences and recommend relevant products to them. The two main types of recommendation systems you will learn are collaborative filtering and content-based filtering.

8. Reinforcement learning

Reinforcement learning lets machines learn the best action to take in a particular situation. Much like humans, the machines learn through trial and error, guided by rewards and penalties. You will learn how reinforcement learning works, Markov Decision Processes (MDPs), and the Q-learning algorithm.

Become a Machine Learning expert in 1 month with Codegnan

Codegnan offers a comprehensive machine learning course with Python that is designed for everyone, from beginners to IT professionals.

What makes us different?

- Course fees: ₹7,999 (available for a limited time)

- Course duration: 1 month

- Theoretical knowledge + hands-on training classes

- Work on multiple live projects to face real-world challenges

- Delivered by students from top universities and professionals presently working in the domain

- Receive industry-accredited certificates on successful course completion

- Both online and offline (Hyderabad, Vijayawada, and Bangalore) classes available for students

- Trusted by 30,000+ students globally

- Codegnan learners are placed in 1250+ top companies

- Get placement assistance with our Job acceleration program

👉 Enroll in Codegnan’s classroom training:

FAQs

What is the Machine Learning course fee?

The Machine Learning course fee at Codegnan is ₹7,999. Fees at other institutions may differ for several reasons.

The course fee of any Machine Learning training program depends on the course curriculum, trainers’ experience, availability of practical and theory classes, working on live projects, and availability of additional facilities like placement assistance, interview preparation, resume building, etc.

Is the Machine Learning course very difficult?

The Machine Learning course can be a little difficult, as you need to understand multiple concepts, from data visualization and types of machine learning algorithms to Natural Language Processing and web development. However, implementing machine learning isn’t hard: the coding is straightforward, and Python libraries and packages do most of the work.

What is the best way to learn Machine Learning?

The best way to learn machine learning is to gain a practical understanding of Python libraries and a working knowledge of basic mathematics. Once you understand ML concepts, apply your skills to multiple projects. Working on projects clears up your doubts and prepares you for real-life challenges.

Sairam Uppugundla is the CEO and founder of Codegnan IT Solutions. With a strong background in Computer Science and over 10 years of experience, he is committed to bridging the gap between academia and industry.

Sairam Uppugundla’s expertise spans Python, Software Development, Data Analysis, AWS, Big Data, Machine Learning, Natural Language Processing (NLP) and more.

He previously worked as a Board of Studies member at PB Siddhartha College of Arts and Science, where, drawing on his expertise in data science, he helped design the curriculum for the BSc Data Science branch. He also worked as a Data Science consultant for Andhra Pradesh State Skill Development Corporation (APSSDC).